There is more to tell about the device

Yesterday, Apple finally unveiled its Vision Pro mixed reality headset. The presentation ran long and was packed with details, and some that were initially overlooked are now coming to light.

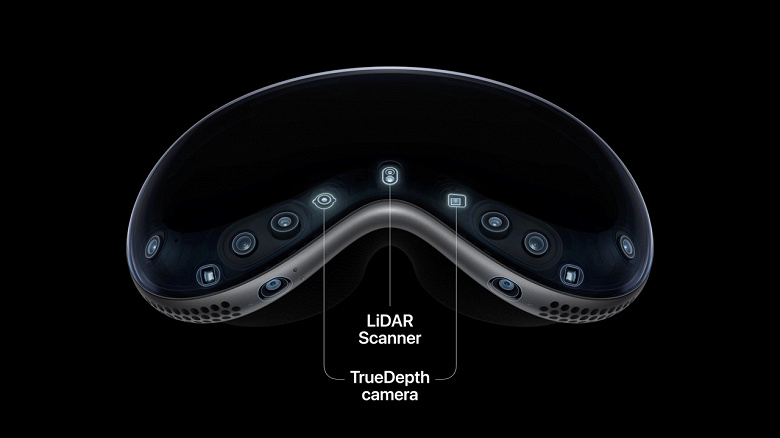

For example, the headset is equipped with a total of 12 cameras, four depth sensors, one lidar and six microphones. All this is necessary for mixing virtual reality with the outside world.

Six of the twelve cameras sit under the front glass. Two of those six capture a high-resolution color image to create that blend of the real and the virtual, transmitting “more than one billion color pixels per second.”

Two cameras track the face and facial expressions, while internal infrared cameras track the eyes.

Depth sensors are needed, among other things, to track the user’s hands. The Apple headset does not come with controllers: it is operated through gestures, gaze and voice commands.


The lidar is used to orient the device in space. Apple claims the Vision Pro has excellent environmental awareness, good enough that virtual objects projected onto real ones cast correct virtual shadows.

To reduce the load on the CPU and GPU, the headset uses dynamically foveated rendering: the image is rendered at full resolution only in the area the user is currently looking at, while the remaining areas are rendered at reduced resolution. The PS VR2 uses the same approach.
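The idea behind foveated rendering can be illustrated with a minimal sketch. This is not Apple's implementation: the function name `shading_scale`, the normalized screen coordinates, and all radius and scale values are hypothetical, chosen only to show how resolution could fall off with distance from the gaze point.

```python
import math


def shading_scale(tile_center, gaze, inner_radius=0.15, outer_radius=0.45, min_scale=0.25):
    """Return a resolution scale (1.0 = full) for a screen tile.

    Coordinates are normalized to the 0..1 range. All thresholds are
    illustrative assumptions, not values published by Apple.
    """
    dist = math.dist(tile_center, gaze)
    if dist <= inner_radius:
        # Tile is where the eye is looking: render at full resolution.
        return 1.0
    if dist >= outer_radius:
        # Far periphery: render at the lowest allowed resolution.
        return min_scale
    # In between, blend linearly from full resolution down to min_scale.
    t = (dist - inner_radius) / (outer_radius - inner_radius)
    return 1.0 - t * (1.0 - min_scale)


if __name__ == "__main__":
    gaze = (0.5, 0.5)  # hypothetical gaze point at the center of the screen
    for tile in [(0.5, 0.5), (0.8, 0.5), (0.0, 0.0)]:
        print(tile, round(shading_scale(tile, gaze), 3))
```

Because the gaze point moves with the eye, a renderer following this approach would recompute the scale for each tile every frame, which is why fast eye tracking matters for the technique.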

The Apple R1 chip in the headset processes data from the cameras, microphones and sensors. Among other things, this brings the delay in displaying the image down to 12 ms.

Apple did not disclose the exact screen resolution, but cited 23 million pixels across the two displays. With an aspect ratio of 1:1 or 9:10, both common in headsets, this works out to roughly 3400 x 3400 or 3200 x 3600 pixels per eye, respectively.
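The estimate can be reproduced with a short calculation. The sketch below assumes the 23 million pixels are split evenly between two displays, one per eye; the helper name `per_eye_resolution` is illustrative, and the aspect ratios are the same guesses as in the text, not figures confirmed by Apple.

```python
import math


def per_eye_resolution(total_pixels, aspect_w, aspect_h, displays=2):
    """Estimate (width, height) of one display from a total pixel count.

    Assumes the pixels are divided evenly between `displays` panels and
    that each panel has the aspect ratio aspect_w:aspect_h.
    """
    per_eye = total_pixels / displays
    # width / height = aspect_w / aspect_h and width * height = per_eye,
    # so height = sqrt(per_eye * aspect_h / aspect_w).
    height = math.sqrt(per_eye * aspect_h / aspect_w)
    width = per_eye / height
    return round(width), round(height)


if __name__ == "__main__":
    print(per_eye_resolution(23_000_000, 1, 1))   # square panel
    print(per_eye_resolution(23_000_000, 9, 10))  # slightly taller than wide
```

The exact results land slightly off the round figures quoted above, since 3400 x 3400 and 3200 x 3600 are rounded to plausible panel sizes.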