They plan to build nine of these in total and then link them into a single network.

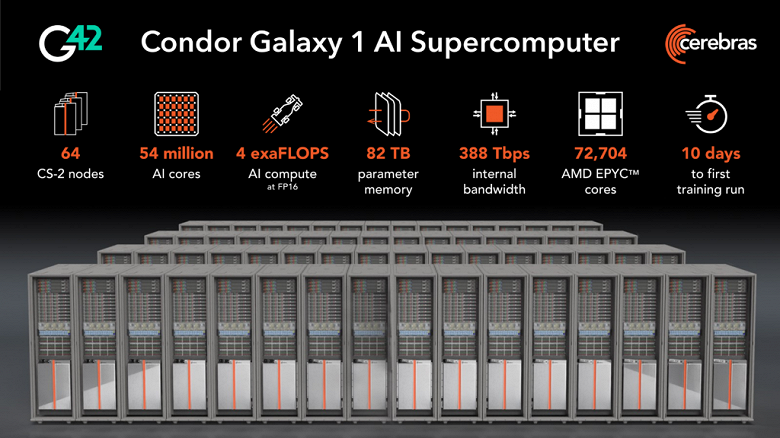

For a year now, a single system with more than 1 exaflops of performance has led the list of the world's most powerful supercomputers by a huge margin, and the media have mostly been discussing two systems under construction that will exceed 2 exaflops. Against this backdrop, Cerebras Systems and G42 unexpectedly introduced the Condor Galaxy 1 (CG-1) supercomputer with a performance of 4 exaflops! There are nuances, of course, and the supercomputer itself is highly unusual, unlike any other familiar system of its class.

The stated performance refers to AI training workloads, that is, half-precision (FP16) calculations. No FP32 figure has been given, but Frontier, which still tops the Top500 list, delivers about 3.3 exaflops in the same mode. So CG-1 could arguably be called the most powerful supercomputer in the world. Its creators, however, phrase it more carefully: the most powerful supercomputer for AI training. Worded that way, the claim is beyond dispute.

But that's not all. The "1" in the name CG-1 is deliberate: Condor Galaxy 1 is just the first of nine identical supercomputers that Cerebras Systems and G42 plan to build. And these will not be separate installations but a single interconnected network! CG-2 and CG-3 are to be deployed in the US as early as next year, and the combined performance of the whole system will reach an incredible 36 exaflops. Given that, it is hard to fault the companies for claiming in their press release that their network of supercomputers will revolutionize the development of artificial intelligence.
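The 36-exaflops figure is simple arithmetic: nine systems at 4 FP16 exaflops each. A minimal Python sketch, assuming every planned system matches CG-1's peak and that performance adds up linearly with no losses from interconnecting the sites (the function and constant names are illustrative only):

```python
# Peak FP16 AI performance of one Condor Galaxy system, in exaflops (from the announcement).
CG_SYSTEM_EXAFLOPS = 4.0
# Number of identical systems Cerebras Systems and G42 plan to build.
PLANNED_SYSTEMS = 9


def network_peak_exaflops(systems: int, per_system: float = CG_SYSTEM_EXAFLOPS) -> float:
    """Naive aggregate peak: assumes linear scaling and no interconnect overhead."""
    return systems * per_system


print(network_peak_exaflops(1))                # 4.0  (CG-1 alone)
print(network_peak_exaflops(3))                # 12.0 (CG-1, CG-2 and CG-3 in the US)
print(network_peak_exaflops(PLANNED_SYSTEMS))  # 36.0 (the full planned network)
```

Real distributed training across geographically separate sites would not scale perfectly, so the sum is best read as a theoretical ceiling, which is also how such headline figures are usually meant.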

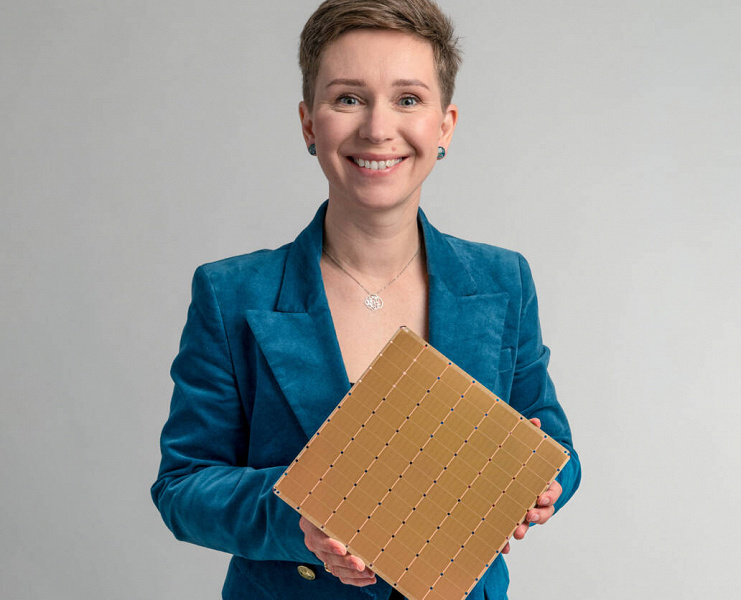

It’s based on just 64 chips, but each is the size of an iPad Pro

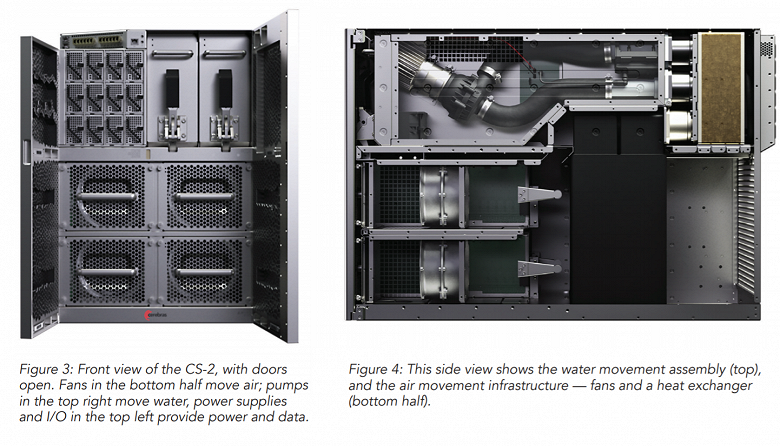

It is worth recalling that Cerebras Systems is the company that amazed everyone in 2019 with a chip the size of an iPad Pro. That product, the Wafer Scale Engine, contained 1.2 trillion transistors and was the largest square chip that could be cut from a 300 mm wafer. In 2021, the company introduced the second generation, the Wafer Scale Engine 2 (WSE-2), with the same dimensions, but thanks to the 7 nm process the transistor count grew to 2.6 trillion. The WSE-2 has an area of more than 46,000 mm², contains 850,000 cores and 40 GB of on-chip memory with a bandwidth of 20 PB/s. The power draw of the chip itself is unknown, but a CS-2 system built around a single WSE-2 is rated at 23 kW.

Thanks to this colossal number of cores, only 64 chips were needed to build the most powerful supercomputer in its class. Strictly speaking, it uses 64 CS-2 systems, but each contains just one WSE-2 chip. As a result, the supercomputer has more than 54 million cores. For comparison, Frontier has 8.7 million.
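The core count follows from multiplying the per-chip figures by 64. A rough Python tally, assuming each CS-2 contributes exactly the quoted WSE-2 specifications (850,000 cores, 40 GB of on-chip memory, 23 kW); the power sum covers only the CS-2 systems, not the AMD Epyc servers or networking, and all names here are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CS2:
    """Per-system figures quoted for a CS-2 built around one WSE-2 chip."""
    cores: int = 850_000
    on_chip_memory_gb: int = 40
    power_kw: float = 23.0


SYSTEMS_IN_CG1 = 64
FRONTIER_CORES = 8_700_000  # approximate figure quoted in the article


def cluster_totals(n: int, system: CS2 = CS2()) -> dict:
    """Naive sums over n identical CS-2 systems (ignores host CPUs and networking)."""
    return {
        "cores": n * system.cores,
        "on_chip_memory_gb": n * system.on_chip_memory_gb,
        "power_kw": n * system.power_kw,
    }


totals = cluster_totals(SYSTEMS_IN_CG1)
print(totals["cores"])                             # 54400000
print(totals["on_chip_memory_gb"])                 # 2560
print(totals["power_kw"])                          # 1472.0
print(round(totals["cores"] / FRONTIER_CORES, 1))  # 6.3
```

By this estimate CG-1 has roughly 6.3 times as many cores as Frontier, although the cores are very different in design, so the ratio says little about relative performance on its own.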

Beyond the 4 exaflops, the developers cite support for models with 600 billion parameters, with the option of expanding the configuration to handle up to 100 trillion parameters. The system also includes AMD Epyc processors, although the company does not specify the model; all that is known is that the supercomputer contains 72,704 CPU cores in total.

Cerebras and G42 offer CG-1 as a cloud service, so, in principle, anyone can tap the power of the new giant. The companies themselves describe it as follows:

> By delivering 4 exaflops of AI computation at FP16, CG-1 significantly reduces AI training time while eliminating the problems of distributed computing. Many cloud companies have announced massive GPU clusters that cost billions of dollars to build but are extremely difficult to use. Spreading one model across thousands of tiny GPUs takes months and dozens of people with rare expertise. CG-1 eliminates this problem. Setting up a generative AI model takes minutes, not months, and can be done by a single person. CG-1 is the first of three 4-exaflops AI supercomputers to be deployed in the US. Over the next year, we plan to expand this deployment with G42 to deliver a staggering 36 exaflops of efficient custom AI computing.

It should also be noted that all of the engineering work is done by Cerebras Systems. G42 is a technology group from the UAE that essentially bought the hardware from Cerebras to build these supercomputers.