It is based on eight Nvidia A100 accelerators.

We have just covered the Nvidia A100 accelerator and the GPU at its core, and mentioned the DGX A100 station built on the new accelerators. That station has now been officially unveiled.

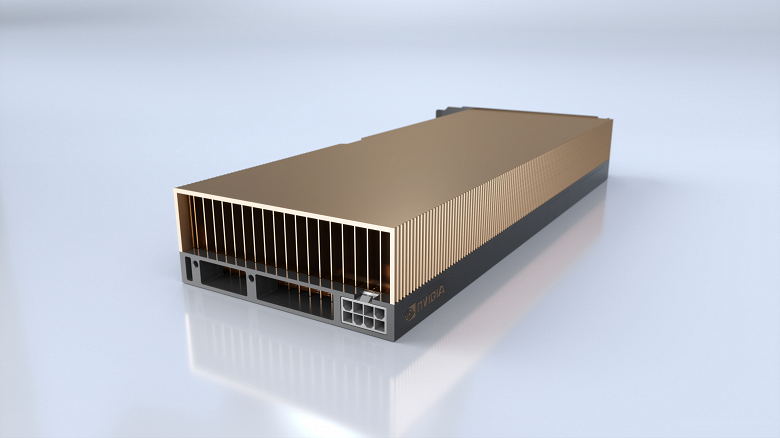

Nvidia DGX A100

Nvidia itself calls the DGX A100 a universal system for all types of artificial intelligence workloads, offering unprecedented compute density, performance and flexibility. It is also the first system of its kind to reach 5 PFLOPS. As a reminder, it costs 199,000 dollars.

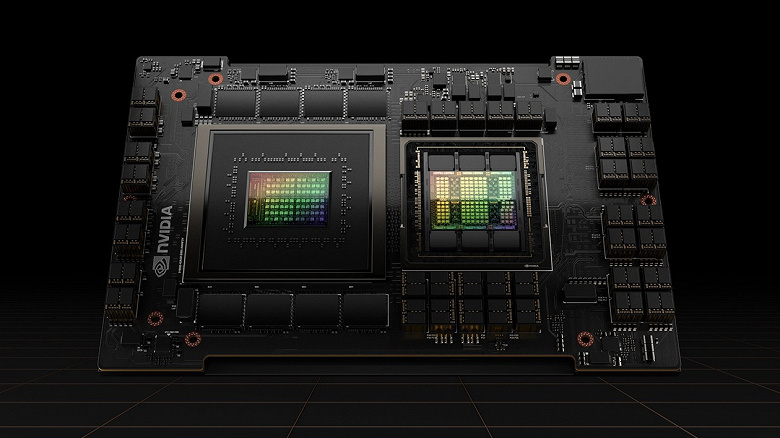

At the heart of the DGX A100 are eight Nvidia A100 accelerators with 40 GB of memory each, for a total of 320 GB of video memory in the system. The DGX A100 also has 1 TB of RAM and, interestingly, is built on AMD processors, whereas Nvidia previously used Intel CPUs in its DGX stations. In this case, it is a pair of 64-core Epyc 7742 chips.

Nvidia also notes the presence of nine Mellanox ConnectX-6 VPI HDR InfiniBand/Ethernet network adapters with a peak bidirectional bandwidth of 450 GB/s. Storage is provided by a 15 TB system with a peak bandwidth of 25 GB/s. All of this draws about 6.5 kW of power and fits in a chassis measuring 264.4 x 482.3 x 897.1 mm.

In our previous news item, we already talked about Multi-Instance GPU (MIG) technology, which lets you "divide" the GPU in the A100 accelerator into seven "separate" GPUs. MIG also works on the DGX A100 station, where it allows up to 56 such GPUs to be created, each assigned its own task.
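The figure of 56 is simple multiplication: eight accelerators, each split into at most seven MIG instances. A minimal Python sketch of that arithmetic, using the numbers quoted above (the constant and function names are illustrative and do not belong to any Nvidia tool):

```python
# Figures quoted in the article; all names here are hypothetical.
GPUS_PER_DGX_A100 = 8          # A100 accelerators in one station
MAX_MIG_INSTANCES_PER_GPU = 7  # maximum MIG partitions per A100


def max_mig_instances(gpus: int = GPUS_PER_DGX_A100,
                      per_gpu: int = MAX_MIG_INSTANCES_PER_GPU) -> int:
    """Upper bound on MIG instances across all GPUs in one station."""
    return gpus * per_gpu


print(max_mig_instances())  # 56
```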

Of course, DGX A100 stations can be clustered. In particular, Nvidia is preparing the DGX SuperPOD, a system of 140 DGX A100 stations with a combined performance of 700 PFLOPS.
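The 700 PFLOPS figure matches a straightforward sum of the per-station peak numbers. The sketch below reproduces it, assuming performance adds up linearly across stations, which is how peak figures are usually quoted and ignores any interconnect overhead. The function name and the returned GPU count are illustrative derivations from the article's numbers, not values stated by Nvidia here.

```python
# Figures quoted in the article; names are hypothetical.
PFLOPS_PER_STATION = 5   # peak performance of one DGX A100
GPUS_PER_STATION = 8     # A100 accelerators per station
SUPERPOD_STATIONS = 140  # stations in the DGX SuperPOD


def cluster_totals(stations: int) -> dict:
    """Sum peak figures over a cluster, assuming linear scaling."""
    return {
        "pflops": stations * PFLOPS_PER_STATION,
        "gpus": stations * GPUS_PER_STATION,
    }


totals = cluster_totals(SUPERPOD_STATIONS)
print(totals["pflops"])  # 700
print(totals["gpus"])    # 1120
```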