Last updated on September 12th, 2023 at 03:42 pm

According to MLPerf tests

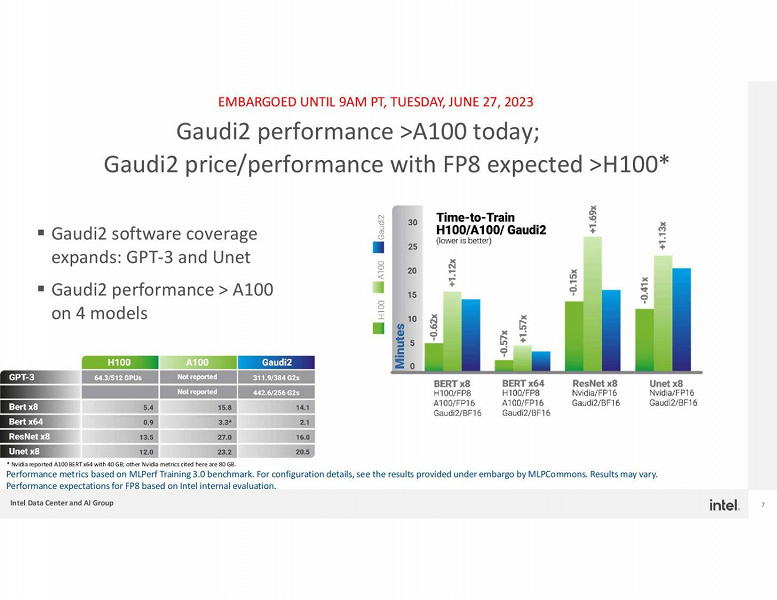

It seems that Intel can compete with Nvidia in a rather unexpected field. The first tests of the Intel Gaudi2 accelerator show that it can compete with the Nvidia A100 and even the H100 monsters in the tasks of training large language models.

Intel and Habana have published results for the new accelerator in MLPerf, which is currently the closest thing to a universal benchmark for AI hardware. It covers many tasks, but one of the most telling is the time needed to train the GPT-3 model.

AI accelerator Intel Gaudi2 can compete with Nvidia A100 and H100

In the case of Gaudi2, 384 accelerators trained the model in 311 minutes. This cannot be compared directly with Nvidia's results, because a fair comparison would be against a system of comparable price. Published results show that a cluster of more than 3,000 H100 accelerators completes the same task in about 11 minutes, but that is a system of an entirely different scale.
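One rough way to put the two results on a similar footing is to multiply the number of accelerators by the training time, giving total accelerator-minutes. The sketch below uses only the figures quoted above and assumes exactly 3,000 H100s as a stand-in for "more than 3,000", so the H100 total is a lower bound and the resulting ratio an upper bound. The function name is illustrative, and the calculation ignores price, power, numerical precision, and scaling efficiency.

```python
def accelerator_minutes(num_accelerators: int, minutes: float) -> float:
    """Total accelerator time consumed by one training run."""
    return num_accelerators * minutes


# Figures quoted in the article; 3,000 is an assumed lower bound for the H100 cluster.
gaudi2_total = accelerator_minutes(384, 311)
h100_total = accelerator_minutes(3000, 11)

# How many times more accelerator time the Gaudi2 run used (an upper bound).
ratio = gaudi2_total / h100_total

print(f"Gaudi2: {gaudi2_total:,.0f} accelerator-minutes")
print(f"H100:   {h100_total:,.0f} accelerator-minutes (lower bound)")
print(f"Ratio:  {ratio:.2f}x")
```

On these assumptions the Gaudi2 run used at most about 3.6 times as much accelerator time as the H100 run. Whether that gap is acceptable depends on what each accelerator costs to buy and operate, which this estimate does not capture.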

At the same time, Intel itself claims that its accelerator beats the Nvidia A100 on price-performance in FP16 calculations, and says it expects to beat the H100 on price-performance once FP8 support arrives in September.

Given the severe shortage of AI accelerators on the market, and the fact that Nvidia cannot keep up with demand, Intel's solutions could be in great demand even if they are not as good as competing products.

Gaudi2 and H100 are not large language models (LLMs) themselves. Both are AI accelerators, the hardware on which LLMs are trained and served. The ability to generate text, translate languages, write creative content, and answer questions belongs to the model and its training data, not to the chip it runs on.

What the accelerator does determine is how quickly and how cheaply a model can be trained or run, and here there are some key differences. Gaudi2 is a dedicated deep learning accelerator from Intel's Habana Labs. It is programmed through Habana's SynapseAI software stack and has 24 100-Gigabit Ethernet ports built into the chip for scaling out across servers.

H100 is Nvidia's general-purpose data center GPU, programmed through CUDA. In the MLPerf results above, a large H100 cluster trained GPT-3 far faster than the Gaudi2 system did. That makes H100 the natural choice when raw speed matters most, for example in latency-sensitive services such as chatbots, provided the hardware can be obtained.

Here is a table that summarizes the key differences between Gaudi2 and H100:

| Feature | Gaudi2 | H100 |
|---|---|---|
| What it is | Dedicated deep learning accelerator | General-purpose data center GPU |
| Vendor | Intel (Habana Labs) | Nvidia |
| Software stack | SynapseAI | CUDA |
| Scale-out networking | 24 integrated 100 GbE (RoCE) ports | NVLink within a server; InfiniBand or Ethernet between servers |
| GPT-3 MLPerf training result cited above | 311 minutes on 384 accelerators | About 11 minutes on more than 3,000 accelerators |

Ultimately, the best choice for you will depend on your specific needs. If price-performance and availability matter most, and your software can target SynapseAI, then Gaudi2 is a good choice. If you need the fastest possible training or inference and depend on the CUDA ecosystem, then H100 is a good choice.

Gaudi 3 vs H100

Gaudi 3 and H100 are both AI accelerators designed for machine learning and deep learning tasks. They are not built on the same process: according to the vendors' published specifications, Gaudi 3 is manufactured on TSMC's 5 nm process and H100 on TSMC's custom 4N process. There are other key differences between the two chips. The H100 figures below are for the SXM version, and all performance numbers are vendor-quoted peaks.

Gaudi 3

- 128 GB of HBM2e memory

- 3.7 TB/s memory bandwidth

- 1,835 TFLOPS of peak dense BF16 matrix performance

- 1,835 TFLOPS of peak dense FP8 matrix performance

- 24 integrated 200 Gigabit Ethernet ports for inter-chip networking

H100

- 80 GB of HBM3 memory

- 3.35 TB/s memory bandwidth

- About 989 TFLOPS of peak dense BF16 Tensor Core performance

- About 1,979 TFLOPS of peak dense FP8 Tensor Core performance

- 900 GB/s of NVLink inter-chip bandwidth

On these figures, Gaudi 3 has more memory and slightly higher memory bandwidth than the H100. This gives Gaudi 3 an advantage for tasks that require a lot of memory, such as training and serving large language models. Gaudi 3 also has the higher peak BF16 throughput on paper, while the two chips are close in peak FP8 throughput. Peak figures are only a starting point, because delivered performance depends heavily on software and workload.

Gaudi 3 does not have a lower TDP than the H100: the air-cooled Gaudi 3 accelerator card is rated at up to 900 W, against up to 700 W for the H100 SXM. It also uses the older HBM2e memory technology and a slightly older process node. Whether it is more energy efficient therefore depends on how much work it delivers per watt on a given workload, which peak specifications alone cannot settle.

Ultimately, the best choice for you will depend on your specific needs and requirements. If memory capacity per accelerator and Ethernet-based scale-out matter most, then Gaudi 3 is the better fit on paper. If you depend on the CUDA software ecosystem or need a lower power budget per accelerator, then the H100 is the better choice.

Here is a table that summarizes the key differences between the two chips:

| Feature | Gaudi 3 | H100 (SXM) |
|---|---|---|
| Process node | TSMC 5 nm | TSMC 4N |
| Memory | 128 GB HBM2e | 80 GB HBM3 |
| Memory bandwidth | 3.7 TB/s | 3.35 TB/s |
| Peak BF16 performance (dense) | 1,835 TFLOPS | About 989 TFLOPS |
| Peak FP8 performance (dense) | 1,835 TFLOPS | About 1,979 TFLOPS |
| Inter-chip networking | 24 × 200 GbE | NVLink, 900 GB/s |
| TDP | Up to 900 W | Up to 700 W |

Gaudi2 vs H100

Gaudi2 and H100 are both current-generation AI accelerators, but they have different strengths and weaknesses.

Gaudi2 is a dedicated deep learning accelerator for training and deploying models. It is built on a 7 nm process, pairs 24 programmable Tensor Processor Cores with 2 matrix math engines, and carries 96 GB of HBM2e memory. Intel positions it for deep learning rather than for traditional high-performance computing (HPC) tasks such as simulating physical systems.

H100 is a general-purpose data center GPU. It covers AI workloads such as natural language processing and computer vision, and also HPC workloads that need double-precision (FP64) arithmetic. Its Transformer Engine, which adds FP8 support, is specifically optimized for transformer models.

Here is a table comparing the specifications of Gaudi2 and H100, based on the vendors' published figures:

| Specification | Gaudi2 | H100 (SXM) |
|---|---|---|
| Process node | 7 nm | TSMC 4N |
| Compute engines | 24 Tensor Processor Cores, 2 matrix math engines | 132 streaming multiprocessors, 528 Tensor Cores |
| Memory | 96 GB HBM2e | 80 GB HBM3 |
| Memory bandwidth | 2.45 TB/s | 3.35 TB/s |
| Power consumption (TDP) | 600 W | Up to 700 W |

Here is a summary of the pros and cons of each accelerator:

Gaudi2

- Pros: More memory per accelerator, integrated Ethernet networking, and pricing aimed at better price-performance

- Cons: Smaller software ecosystem than CUDA, and not aimed at HPC workloads

H100

- Pros: Fastest published MLPerf training results, mature CUDA software ecosystem, and support for both AI and HPC workloads

- Cons: Expensive and in short supply

Ultimately, the best accelerator for you will depend on your specific needs and workload. If you are looking for a cost-effective accelerator dedicated to deep learning, then Gaudi2 is a good choice. If you need the highest performance, or a single accelerator for both HPC and AI workloads, then H100 is a better option.

Here are some additional things to consider when choosing between Gaudi2 and H100:

- Your budget: Intel positions Gaudi2 as the more affordable option.

- Your power requirements: Gaudi2 is rated at 600 W, compared with up to 700 W for the H100 SXM. The PCIe version of the H100 draws considerably less.

- Your cooling requirements: Cooling follows power draw, so plan for the total TDP of all the accelerators in each server.

- Your specific workload: If you have a specific workload in mind, you should make sure that the accelerator you choose, and its software stack, is well-suited for that workload.