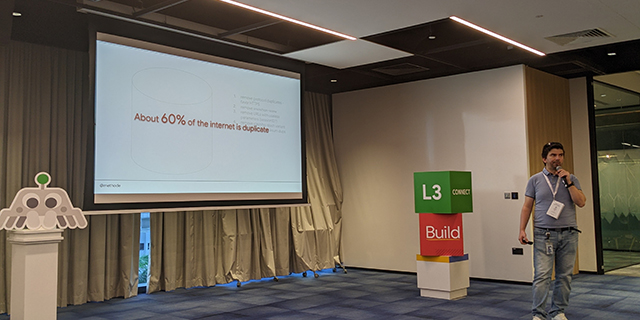

At the Google Search Central Live event in Singapore, Google shared an interesting figure about the current state of the Internet:

About 60% of the Internet is duplicated.

This does not seem to be only about content being copied and republished elsewhere. It is more about site configurations that make the same content available at several different URLs. Google also explained how to address this kind of duplication:

- Remove duplicate protocols – favor HTTPS.

- Remove www/non-www duplicates: serve the site from one hostname variant.

- Remove URLs with useless parameters.

- Remove slash/no-slash duplicates: pick one trailing-slash form.

- Remove other duplicates whose content has the same checksum.
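
As a rough illustration of the list above, here is a minimal Python sketch that maps URL variants to one canonical form and computes a content checksum. It is not Google's method. The function names, the `IGNORED_PARAMS` set, and the choice of the non-www, no-trailing-slash form are all assumptions made for the example; a real site would pick its own preferred variants.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of parameters that do not change the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonicalize(url: str) -> str:
    """Collapse common URL variants into one form (assumed preferences)."""
    parts = urlsplit(url)

    # www/non-www: this sketch arbitrarily prefers the non-www hostname.
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]

    # Slash/no slash: drop trailing slashes, but keep "/" for the root.
    path = parts.path.rstrip("/") or "/"

    # Useless parameters: keep only those not in IGNORED_PARAMS.
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]

    # Protocols: always emit HTTPS; the fragment is dropped.
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def content_checksum(body: str) -> str:
    """SHA-256 of the page body, for spotting identical content."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


print(canonicalize("http://www.example.com/page/?utm_source=x&id=5"))
# prints https://example.com/page?id=5
```

On a live site the same outcome is usually achieved with redirects and canonical link tags rather than application code. The sketch only shows which URL variants end up treated as one page.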

All of this helps use resources more efficiently and lets search engines process sites more accurately.